By Emily Litka

This is the second in a series of blog posts about the DOJ Rule regarding Access To U.S. Sensitive Personal Data and Government-Related Data by Countries of Concern or Covered Persons (the “DOJ Rule”). It provides an overview of one of the categories of data that is in scope under the DOJ Rule: bulk U.S. sensitive personal data.

The data types involved in a “data transaction” are critical to determining whether that transaction is covered by the DOJ Rule. One of the two categories of data covered by the DOJ Rule is “bulk U.S. sensitive personal data.” This blog post breaks down what data types constitute bulk U.S. sensitive personal data.

What is sensitive personal data under the DOJ Rule?

Bulk U.S. sensitive personal data is sensitive personal data that meets the bulk volume thresholds defined in the DOJ Rule. The DOJ Rule recognizes six types of sensitive personal data: (1) covered personal identifiers, (2) precise geolocation data, (3) biometric identifiers, (4) human ’omic data, (5) personal health data, and (6) personal financial data. Sensitive personal data also includes combinations of these data types.

The definitions of these data types are broad and sweep in many types of personal data that have not traditionally been regulated as sensitive personal data under U.S. privacy or data security laws.

1. Covered personal identifiers: Covered personal identifiers consist of any “listed identifier” that is disclosed either (a) in combination with any other listed identifier, or (b) in combination with other data disclosed by a transacting party pursuant to the transaction such that the listed identifier is linked or linkable to other listed identifiers or to other sensitive personal data. The listed identifiers are any pieces of data in any of the following data fields:

Full or truncated government identification or account number (e.g., Social Security number, driver's license or State identification number, passport number, or Alien Registration Number)

Full financial account numbers or personal identification numbers associated with a financial institution or financial-services company

Device-based or hardware-based identifiers (e.g., International Mobile Equipment Identity (“IMEI”), Media Access Control (“MAC”) address, or Subscriber Identity Module (“SIM”) card number)

Network-based identifiers (e.g., Internet Protocol (“IP”) address or cookie data)

Demographic or contact data (e.g., first and last name, birth date, birthplace, ZIP code, residential street or postal address, phone number, email address, or similar public account identifiers)

Advertising identifiers (e.g., Google Advertising ID, Apple ID for Advertisers, or other mobile advertising ID (“MAID”))

Account-authentication data (e.g., account username, account password, or an answer to security questions)

Call-detail data (e.g., Customer Proprietary Network Information (“CPNI”))

As noted above, a listed identifier can become a covered personal identifier, and thus in scope under the DOJ Rule, in two ways. First, a listed identifier may be disclosed in combination with other listed identifiers. For example, if a MAID (an advertising identifier) and a MAC address (a device-based or hardware-based identifier) are disclosed together, a covered personal identifier is disclosed. This example involves data from two different fields within the listed identifier definition. A MAC address disclosed with a SIM card number would also likely be a covered personal identifier, even though both pieces of data come from the same field (device-based or hardware-based identifiers), because the definition of listed identifier picks up any piece of data in any of the fields it lists. In contrast, a MAC address disclosed in isolation would not be a covered personal identifier because it is only a single listed identifier.

Second, a listed identifier may be disclosed in combination with other sensitive personal data under the DOJ Rule, so that the listed identifier is reasonably linkable to the individual and their sensitive personal data. For example, this occurs where a residential address (a demographic or contact data type of listed identifier) is disclosed together with an individual’s medical condition.

The definition of covered personal identifiers has two exceptions. First, it excludes demographic or contact data that is linked only to other demographic or contact data (e.g., first and last name, birthplace, ZIP code, residential street or postal address, phone number, email address, and similar public account identifiers). Second, it excludes a network-based identifier, account-authentication data, or call-detail data that is linked only to other network-based identifiers, account-authentication data, or call-detail data as necessary for the provision of telecommunications, networking, or similar services.

Although it falls under the DOJ Rule’s “sensitive personal data” umbrella, the term covered personal identifiers is broad, and many categories of data it covers are likely to be processed by companies that routinely offer websites and mobile apps.
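For teams mapping their data inventories, the two routes and two exceptions above can be sketched as a simple decision function. The Python below is a simplified illustration under stated assumptions, not a compliance tool: the field labels (`device_id`, `demographic_contact`, and so on), the `Disclosure` class, and the `is_covered_personal_identifier` function are all hypothetical, and the sketch reduces questions of linkage and necessity, which in practice call for legal judgment, to yes/no flags.

```python
from dataclasses import dataclass, field

# Hypothetical labels for the eight listed-identifier data fields described above.
LISTED_FIELDS = frozenset({
    "government_id",
    "financial_account",
    "device_id",
    "network_id",
    "demographic_contact",
    "advertising_id",
    "account_auth",
    "call_detail",
})

# Fields eligible for the telecommunications/networking exception.
TELECOM_FIELDS = frozenset({"network_id", "account_auth", "call_detail"})


@dataclass
class Disclosure:
    """Hypothetical model of the data disclosed about one individual in a transaction."""
    # One entry per listed-identifier data element, labeled by its field
    # (e.g., a MAC address and a SIM card number are two "device_id" entries).
    identifiers: list[str] = field(default_factory=list)
    # True if other sensitive personal data (e.g., a medical condition) is linked.
    has_other_sensitive_data: bool = False
    # True if the linkage is necessary to provide a telecom, networking, or similar service.
    needed_for_telecom_service: bool = False


def is_covered_personal_identifier(d: Disclosure) -> bool:
    unknown = set(d.identifiers) - LISTED_FIELDS
    if unknown:
        raise ValueError(f"unknown identifier fields: {sorted(unknown)}")
    if not d.identifiers:
        return False
    # Second route: a listed identifier combined with other sensitive personal data.
    if d.has_other_sensitive_data:
        return True
    # First route: needs at least two listed identifiers (same or different fields).
    if len(d.identifiers) < 2:
        return False
    present = set(d.identifiers)
    # Exception 1: demographic or contact data linked only to other demographic or contact data.
    if present == {"demographic_contact"}:
        return False
    # Exception 2: network, authentication, or call-detail data linked only to each
    # other as necessary for a telecommunications, networking, or similar service.
    if present <= TELECOM_FIELDS and d.needed_for_telecom_service:
        return False
    return True
```

For instance, `Disclosure(["advertising_id", "device_id"])` (the MAID plus MAC address example) evaluates to `True`, `Disclosure(["device_id", "device_id"])` (a MAC address plus a SIM card number) also evaluates to `True`, and `Disclosure(["device_id"])` (a lone MAC address) evaluates to `False`.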

2. Precise geolocation data: Precise geolocation data is data, whether real-time or historical, that identifies the physical location of an individual or a device with a precision of within 1,000 meters. This definition captures less precise geolocation data than state privacy laws, which commonly define precise geolocation as accuracy within a radius of 1,750 feet (about 533 meters).
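The comparison between the two standards is simple unit arithmetic. The short Python sketch below converts the 1,750-foot state-law radius to meters and checks a hypothetical reading accurate to 800 meters against each standard; the constant and function names are illustrative only, and treating “within” as inclusive is an assumption.

```python
FEET_TO_METERS = 0.3048  # exact definition of the international foot

DOJ_PRECISION_METERS = 1_000   # DOJ Rule: precision of within 1,000 meters
STATE_RADIUS_FEET = 1_750      # radius commonly used in state privacy laws
STATE_RADIUS_METERS = STATE_RADIUS_FEET * FEET_TO_METERS  # about 533.4 meters


def within_precision(accuracy_meters: float, limit_meters: float) -> bool:
    """True if a location reading is at least as precise as the limit (inclusive, an assumption)."""
    return accuracy_meters <= limit_meters


if __name__ == "__main__":
    print(f"1,750 feet = {STATE_RADIUS_METERS:.1f} meters")  # 1,750 feet = 533.4 meters
    # A reading accurate to 800 meters is precise under the DOJ Rule's
    # 1,000-meter standard, but not under a 1,750-foot state-law standard.
    print(within_precision(800, DOJ_PRECISION_METERS))  # True
    print(within_precision(800, STATE_RADIUS_METERS))   # False
```

Any reading with accuracy between roughly 533 and 1,000 meters therefore falls inside the DOJ Rule’s definition while sitting outside the typical state-law definition.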

3. Biometric identifiers: Biometric identifiers are measurable characteristics or behaviors about an individual used to recognize them or verify their identity. This includes facial images, voice prints and patterns, retina and iris scans, palm prints and fingerprints, gait, and keyboard usage patterns that are enrolled in a biometric system and the templates created by the system. The DOJ Rule does not define “biometric system” or “templates.” It’s also unclear at this time whether the condition that data be “enrolled in a biometric system” and that a template can be created from it applies only to “keyboard usage patterns” or to all the listed data.

4. Human ’omic data: Human ’omic data includes human genomic data, human epigenomic data, human proteomic data, and human transcriptomic data. At a high level, these categories cover biological sequences of genetic data (e.g., the result or results of a genetic test) and systems-level analyses of proteins, genes, DNA, and RNA.

5. Personal health data: Personal health data is data that indicates, reveals, or describes an individual’s past, present, or future physical or mental health or condition; the provision of healthcare to an individual; or the past, present, or future payment for the provision of healthcare to an individual. It includes vital signs, symptoms, and allergies; social, psychological, behavioral, and medical diagnostic, intervention, and treatment history; test results; logs of exercise habits; immunization data; data on reproductive and sexual health; and data on the use or purchase of prescribed medications. Personal health data is not limited to data collected by healthcare and medical professionals; it applies broadly to any entity that collects or holds such data (e.g., a fitness mobile app). This broad definition follows a trend increasingly seen in state laws and could arguably include even website clickstream data relating to health conditions and medication use.

6. Personal financial data: Personal financial data is data about an individual’s credit, bank accounts, purchase and payment history, assets, debts, financial statements, and data in a credit report. The DOJ’s FAQs emphasize that this definition does not apply only to data collected by financial institutions; it applies broadly to all purchase and payment history (e.g., a record of an individual’s purchases on an e-commerce site). It does not, however, include inferences drawn from personal financial data (e.g., an inference from a hotel record that a person is interested in business travel is not, by itself, personal financial data).

What if it is anonymized or de-identified?

Data is sensitive personal data under the DOJ Rule even if it is anonymized, pseudonymized, de-identified, or encrypted. These data protection techniques are immaterial to determining whether the data is sensitive personal data. Other techniques, such as tokenization, also may not be sufficient to remove data’s “sensitive” classification under the DOJ Rule. Unlike many data privacy laws, the DOJ Rule does not consider whether data is linked or reasonably linkable to a specific individual before it is classified as sensitive. This is likely because the DOJ Rule is a national security regulation that focuses on different risks and policy objectives. Accordingly, it’s best to treat any individual-level or device-level data as potentially in scope for the DOJ Rule if it corresponds to one of the types of sensitive personal data.

What data is excluded from sensitive personal data?

Sensitive personal data does not include data that does not relate to an individual. The DOJ Rule excludes the following types of data from the definition:

trade secret data (as defined in 18 U.S.C. 1839(3)) or “proprietary information” (as defined in 50 U.S.C. 1708(d)(7));

data that is, at the time of the transaction, lawfully available to the public from a Federal, State, or local government record (such as court records) or in widely distributed media (such as sources that are generally available to the public through unrestricted and open-access repositories);

information or informational materials and ordinarily associated metadata or metadata reasonably necessary to enable the transmission or dissemination of such information or informational materials.

When is it considered “bulk”?

The DOJ Rule prescribes bulk thresholds that determine when a disclosure of sensitive personal data is in scope. The thresholds are calculated on a rolling 12-month basis and can be met through a single covered data transaction or in the aggregate across covered data transactions involving the same U.S. person and the same foreign person or covered person. The look-back over the preceding 12 months should extend back to April 8, 2025, the effective date of the DOJ Rule.
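The rolling aggregation can be pictured as a distinct count of individuals over a sliding 12-month window, grouped by the same U.S. person and the same counterparty. The Python below is a minimal sketch under several assumptions: the `Transaction` record, `rolling_volume`, and `twelve_months_before` are hypothetical names; individuals are assumed to carry a stable ID that allows deduplication across transactions; the window is assumed to end on, and include, the `as_of` date; and the April 8, 2025 effective date is modeled only as an optional `not_before` floor, which is an assumption about how the look-back is bounded rather than a reading of the Rule’s text.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Transaction:
    """Hypothetical record of one covered data transaction."""
    day: date
    us_party: str             # the U.S. person engaging in the transaction
    counterparty: str         # the foreign person or covered person
    subjects: frozenset[str]  # IDs of U.S. individuals whose data was disclosed


def twelve_months_before(d: date) -> date:
    """Same calendar day one year earlier; Feb 29 falls back to Feb 28."""
    try:
        return d.replace(year=d.year - 1)
    except ValueError:
        return d.replace(year=d.year - 1, day=28)


def rolling_volume(txns: list[Transaction], us_party: str, counterparty: str,
                   as_of: date, not_before: Optional[date] = None) -> int:
    """Count distinct U.S. individuals disclosed by one U.S. party to one
    counterparty during the 12 months ending on `as_of` (inclusive)."""
    start = twelve_months_before(as_of) + timedelta(days=1)
    if not_before is not None:
        # Optional floor, e.g. an effective date (how it applies is an assumption).
        start = max(start, not_before)
    subjects: set[str] = set()
    for t in txns:
        if (t.us_party == us_party and t.counterparty == counterparty
                and start <= t.day <= as_of):
            subjects |= t.subjects  # an individual counts once, however often disclosed
    return len(subjects)
```

The resulting count would then be compared against the threshold for the relevant data category. Because individuals are deduplicated with a set, the same person disclosed in several transactions with the same counterparty counts only once.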

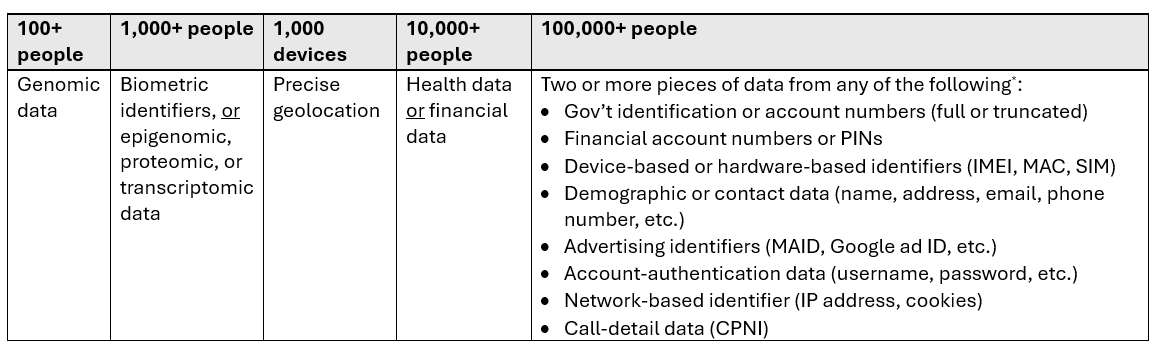

The bulk thresholds are:

Human genomic data: 100 U.S. persons

Human epigenomic data, human proteomic data, human transcriptomic data, biometric identifiers, and precise geolocation data: 1,000 U.S. persons

Personal health data or personal financial data: 10,000 U.S. persons

Covered personal identifiers: 100,000 U.S. persons

The DOJ Rule also covers combined data: a dataset that combines data from the categories above is bulk if it meets the lowest bulk threshold of any category of data it contains.
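The per-category thresholds and the combined-data rule can be sketched together as a small check. The Python below is illustrative only: the category keys and the `bulk_triggers` and `exceeds` functions are hypothetical; it assumes each threshold is crossed when the count is more than the listed number (an assumption to verify against the Rule’s text); it reads the combined-data rule literally, as described above; and it assumes the input already reflects the relevant 12-month window.

```python
# Bulk thresholds from the list above, in number of U.S. persons.
BULK_THRESHOLDS = {
    "human_genomic": 100,
    "human_epigenomic": 1_000,
    "human_proteomic": 1_000,
    "human_transcriptomic": 1_000,
    "biometric_identifiers": 1_000,
    "precise_geolocation": 1_000,
    "personal_health": 10_000,
    "personal_financial": 10_000,
    "covered_personal_identifiers": 100_000,
}


def exceeds(count: int, threshold: int) -> bool:
    # Assumption: a threshold is met when the count is more than the listed number.
    return count > threshold


def bulk_triggers(records: dict[str, set[str]]) -> list[str]:
    """Return which bulk tests a dataset meets.

    `records` maps a hypothetical U.S. person ID to the sensitive-data
    categories held about that person within the 12-month window.
    """
    counts: dict[str, int] = {}
    for categories in records.values():
        for cat in categories:
            counts[cat] = counts.get(cat, 0) + 1

    # Per-category test: each category against its own threshold.
    triggers = [cat for cat, n in sorted(counts.items())
                if exceeds(n, BULK_THRESHOLDS[cat])]

    # Combined-data test: everyone in a multi-category dataset, measured
    # against the lowest threshold of any category present in the dataset.
    if len(counts) > 1:
        people = sum(1 for categories in records.values() if categories)
        if exceeds(people, min(BULK_THRESHOLDS[cat] for cat in counts)):
            triggers.append("combined")
    return triggers
```

Under this reading, a dataset with genomic data on 50 people and precise geolocation data on 60 other people meets neither category’s own threshold, but its 110 individuals are measured against the 100-person genomic threshold, so `bulk_triggers` returns `["combined"]`.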

The table below summarizes these sensitive data types and volumes:

As this post illustrates, the types of data the DOJ Rule classifies as sensitive personal data include many data types classified as sensitive under other state and federal privacy laws, as well as many types of data that are not classified as sensitive under other U.S. laws. This broad definition will pick up many types of personal data that companies and organizations traditionally have not viewed as sensitive, as well as types that may not be subject to robust data governance or data protection protocols.

As part of this series, in the coming weeks we will discuss other aspects of the DOJ Rule and the issues it raises. In the next post, we will look at another element that can determine whether the DOJ Rule may apply to your business: government-related data.


Hintze Law PLLC is a Chambers-ranked and Legal 500-recognized, boutique law firm that provides counseling exclusively on global privacy, data security, and AI law. Its attorneys and data consultants support technology, ecommerce, advertising, media, retail, healthcare, and mobile companies, organizations, and industry associations in all aspects of privacy, data security, and AI law.

Emily Litka is a Senior Associate at Hintze Law PLLC, focusing her practice on global privacy and emerging AI laws and regulations.